What is Artificial Intelligence?

Artificial Intelligence (AI) refers to the creation of intelligent machines that can perform tasks that would typically require human intelligence, such as understanding natural language, recognizing objects, making decisions, and solving complex problems. AI involves the development of algorithms, computer programs, and systems that can process and analyze data in a way that simulates human intelligence.

The concept of AI has been around for several decades, but recent advances in machine learning and deep learning techniques have led to significant progress in the field. AI is now being used in a wide range of applications, including speech recognition, image recognition, natural language processing, autonomous vehicles, robotics, and more.

There are several different types of AI, including:

- Rule-based AI: This type of AI solves problems or makes decisions by following a set of predefined rules written by people. It cannot go beyond those rules or improve them on its own.

- Machine learning: This is a type of AI that involves creating algorithms that can learn from data. Machine learning algorithms can be trained on large datasets to identify patterns and make predictions based on that data.

- Deep learning: This is a subset of machine learning that involves creating neural networks that can learn from large datasets. Deep learning algorithms can be used to solve complex problems like image recognition and natural language processing.
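To make the difference between rule-based AI and machine learning concrete, here is a minimal Python sketch. The task (flagging a message as spam by counting exclamation marks) and the tiny dataset are hypothetical, chosen only for illustration. The rule-based version uses a threshold a person picked, while the machine-learning version learns the threshold from labelled examples, in the style of a one-feature decision stump.

```python
# Hypothetical toy task: flag a message as "spam" based on how many
# exclamation marks it contains. Data and thresholds are illustrative only.


def rule_based_is_spam(message: str) -> bool:
    """Rule-based AI: a person wrote the rule (more than 3 '!' means spam)."""
    return message.count("!") > 3


def learn_threshold(examples: list[tuple[str, bool]]) -> int:
    """Machine learning (a one-feature decision stump): try every threshold
    and keep the one that classifies the labelled examples most accurately."""
    counts = [msg.count("!") for msg, _ in examples]
    best_threshold, best_correct = 0, -1
    for t in range(max(counts) + 1):
        correct = sum((c > t) == label for c, (_, label) in zip(counts, examples))
        if correct > best_correct:
            best_threshold, best_correct = t, correct
    return best_threshold


training_data = [
    ("Hi, see you at lunch", False),
    ("Meeting moved to 3pm!", False),
    ("WIN NOW!!", True),
    ("Free prize!!! Click!!", True),
    ("Great job today!", False),
]

threshold = learn_threshold(training_data)  # learned from the data: 1


def learned_is_spam(message: str) -> bool:
    return message.count("!") > threshold


print(rule_based_is_spam("WIN NOW!!"))  # False: the hand-written rule misses it
print(learned_is_spam("WIN NOW!!"))     # True: the learned threshold catches it
```

The point is not the spam filter itself but where the decision boundary comes from: in the first function it is fixed by a person, in the second it is derived from data and would change if the training examples changed.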

AI has numerous applications in fields such as healthcare, finance, education, manufacturing, and more. It can help to automate tedious tasks, improve decision-making processes, and even save lives by identifying diseases early.

However, there are also concerns about the ethical implications of AI, such as the potential for bias in algorithms, job displacement, and the risk of creating autonomous machines that could be harmful to humans. As the field continues to evolve, it will be important to carefully consider these implications and develop responsible AI systems.

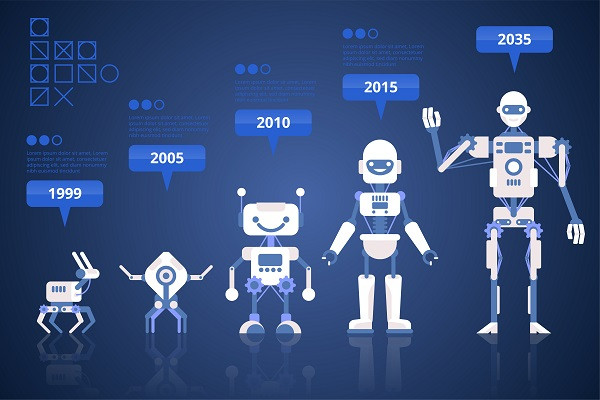

History and Evolution of Artificial Intelligence

The history of artificial intelligence (AI) can be traced back to ancient times when the Greek myths spoke of the creation of artificial beings, such as Pygmalion’s statue brought to life by the goddess Aphrodite. However, the modern era of AI started in the mid-20th century with the development of electronic computers and the idea of using them to simulate human intelligence.

In 1956, John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon organized the Dartmouth Conference, a summer workshop whose 1955 proposal had coined the term “artificial intelligence.” Its participants set out to develop intelligent machines that could learn and reason like humans. The workshop marked the beginning of AI as a research field, which quickly gained momentum with significant funding from the government and the private sector.

In the early years, AI researchers focused largely on rule-based systems, in which they wrote a set of rules that a computer could follow to solve a particular problem. However, these systems were limited in their ability to handle complex problems, and researchers increasingly turned to machine learning, where algorithms learn from data and improve over time.

In the 1980s, expert systems gained popularity. These were AI systems that mimicked the decision-making abilities of human experts in a particular domain, and they were used in fields like medicine, finance, and engineering.

The late 1980s and 1990s saw renewed interest in artificial neural networks, which were inspired by the structure and function of the human brain. Neural networks could learn from data and were used in applications such as handwriting recognition and image classification.

In the 21st century, deep learning emerged as a powerful technique for training neural networks with large datasets. Deep learning has enabled breakthroughs in areas like speech recognition, image and video recognition, and natural language processing.

AI has also seen significant progress in robotics and automation, with robots being developed that can perform a wide range of tasks, from manufacturing to healthcare.

Today, AI is being used in a variety of applications, including self-driving cars, virtual assistants, and fraud detection. However, there are also concerns about the ethical implications of AI, such as the potential for bias in algorithms and the impact of automation on jobs.

Overall, the history and evolution of AI have been marked by significant advances and breakthroughs, with the field continuing to evolve and transform the way we live and work.

Types of Artificial Intelligence

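Artificial Intelligence (AI) systems are commonly classified in two ways: by functionality (reactive machines, limited memory, theory of mind, and self-aware AI) and by capability (narrow or weak AI versus general or strong AI). Here are the major types in both schemes: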

- Reactive Machines: These are the simplest types of AI systems that can only react to specific situations based on pre-programmed rules. They do not have the ability to learn from experience or make decisions based on past events. Examples of reactive machines include Deep Blue, the chess-playing computer, and Roomba, the robotic vacuum cleaner.

- Limited Memory AI: These types of AI systems can use past experiences to make decisions or perform tasks. They have the ability to store and recall data and use that data to make informed decisions. Self-driving cars and personal assistants like Siri and Alexa are examples of limited-memory AI.

- Theory of Mind AI: These types of AI systems can understand and interpret human emotions, beliefs, and intentions, allowing them to interact more effectively with humans. Theory of Mind AI is currently in the early stages of development.

- Self-Aware AI: These types of AI systems are capable of understanding their own existence and emotions, and can think about their own thoughts and feelings. Self-aware AI is still purely hypothetical, and no practical examples of it currently exist.

- Narrow or Weak AI: These types of AI systems are designed to perform a specific task or solve a particular problem. They are the most common type of AI currently in use, and examples include speech recognition software, image recognition software, and chatbots.

- General or Strong AI: These types of AI systems are designed to have the ability to perform any intellectual task that a human can do. General AI does not yet exist and is still purely hypothetical.
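The difference between a reactive machine and a limited-memory system can be sketched in a few lines of Python. This is an illustrative toy, not how Deep Blue or a self-driving car actually works: the hypothetical braking controller below reacts only to the current distance reading, while the limited-memory version keeps a short window of recent readings (using `collections.deque` with `maxlen`) and uses the trend in them to notice that an obstacle is approaching.

```python
from collections import deque

# Hypothetical braking controller. Distances are in metres and the
# thresholds (10 m, 5 m) are illustrative values, not real-world settings.


def reactive_controller(distance):
    """Reactive machine: the action depends only on the current reading."""
    return "brake" if distance < 10.0 else "cruise"


class LimitedMemoryController:
    """Limited-memory AI: keeps a short window of recent readings and uses
    the trend in them when deciding what to do."""

    def __init__(self, window=3):
        self.history = deque(maxlen=window)  # oldest readings drop out automatically

    def act(self, distance):
        self.history.append(distance)
        if distance < 10.0:
            return "brake"
        # How much closer the obstacle got across the remembered window.
        closing = self.history[0] - self.history[-1]
        return "slow_down" if closing > 5.0 else "cruise"


readings = [30.0, 24.0, 18.0]
reactive_actions = [reactive_controller(d) for d in readings]
memory_controller = LimitedMemoryController()
memory_actions = [memory_controller.act(d) for d in readings]
# reactive_actions: ['cruise', 'cruise', 'cruise']
# memory_actions:   ['cruise', 'slow_down', 'slow_down']
```

Both controllers see the same readings, but only the one with memory can tell that the gap is shrinking.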

In summary, AI can be classified into Reactive Machines, Limited Memory AI, Theory of Mind AI, Self-Aware AI, Narrow or Weak AI, and General or Strong AI, based on their capabilities and functionalities. Each type of AI has its own strengths and limitations, and the development of AI is continuing to evolve in exciting ways.

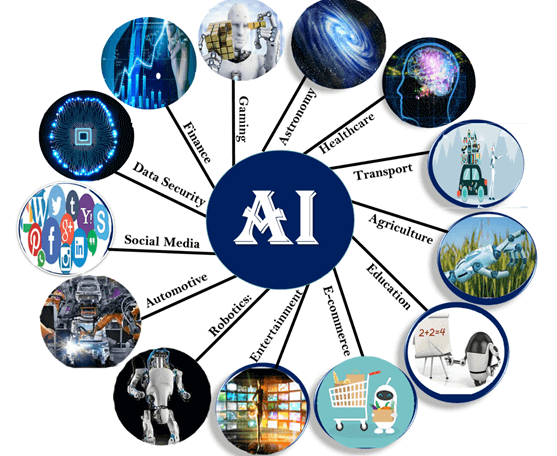

Applications of Artificial Intelligence

Artificial Intelligence (AI) is being used in a wide range of applications across various industries. Here are some of the major applications of AI:

- Healthcare: AI is being used to develop predictive models that can help diagnose diseases and detect potential health issues in patients. It is also being used to develop personalized treatment plans based on a patient’s medical history and health data.

- Finance: AI is being used to analyze financial data and make investment recommendations. It is also being used to detect fraud and identify potential risks in financial transactions.

- Transportation: AI is being used to develop self-driving cars, which can improve safety and reduce the risk of accidents. It is also being used to optimize traffic flow and reduce congestion in cities.

- Retail: AI is being used to develop personalized marketing campaigns and improve customer experiences. It is also being used to optimize inventory management and supply chain operations.

- Manufacturing: AI is being used to optimize production processes, reduce downtime, and improve product quality. It is also being used to develop predictive maintenance models that can help prevent equipment failures.

- Customer Service: AI-powered chatbots are being used to provide customer support and answer frequently asked questions. This can help improve customer satisfaction and reduce the workload on human customer service representatives.

- Education: AI is being used to develop personalized learning plans and provide adaptive learning experiences for students. It is also being used to automate administrative tasks and streamline operations in educational institutions.

- Agriculture: AI is being used to develop precision farming techniques that can help optimize crop yields and reduce waste. It is also being used to monitor soil health and detect potential problems in crops.

Overall, AI is being used to improve efficiency, optimize operations, and enhance decision-making across a wide range of industries and applications. As AI technology continues to advance, it is expected to have an even greater impact on our daily lives and transform the way we work and live.

Machine Learning Algorithms

Machine Learning (ML) algorithms are the backbone of many Artificial Intelligence (AI) systems. Here are some of the major types of ML algorithms:

- Supervised Learning: In supervised learning, the algorithm is trained on a labeled dataset, where the desired output is already known. The algorithm learns to predict the output based on the input features. Examples of supervised learning algorithms include Linear Regression, Logistic Regression, Decision Trees, Random Forests, and Support Vector Machines.

- Unsupervised Learning: In unsupervised learning, the algorithm is trained on an unlabeled dataset, where the desired output is not known. The algorithm learns to identify patterns and relationships in the data without any specific guidance. Examples of unsupervised learning algorithms include K-Means Clustering, Hierarchical Clustering, and Principal Component Analysis.

- Semi-Supervised Learning: In semi-supervised learning, the algorithm is trained on a dataset that contains both labeled and unlabeled data. The algorithm learns to identify patterns and relationships in the labeled data and then applies that knowledge to the unlabeled data. This approach is useful when labeling data is expensive or time-consuming.

- Reinforcement Learning: In reinforcement learning, the algorithm learns to take actions in an environment to maximize a reward signal. The algorithm learns through trial and error and receives feedback in the form of a reward or punishment for each action it takes. Examples of reinforcement learning algorithms include Q-Learning and Deep Reinforcement Learning.

- Deep Learning: Deep learning is a subset of ML that uses neural networks with multiple layers to learn complex patterns and relationships in data. Deep learning algorithms are used for image recognition, natural language processing, and speech recognition. Examples of deep learning algorithms include Convolutional Neural Networks, Recurrent Neural Networks, and Deep Belief Networks.
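To make the supervised case from the list above concrete, the Python sketch below fits the simplest model named there, Linear Regression, to a handful of labelled examples using the closed-form ordinary least squares formulas for a single input feature. The dataset (hours studied versus exam score) is invented for illustration; in practice a library such as scikit-learn would normally be used instead of hand-written formulas.

```python
def fit_linear_regression(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the intercept makes the line
    # pass through the point (mean_x, mean_y).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labelled data: hours studied (input) and exam score (known output).
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

slope, intercept = fit_linear_regression(hours, scores)  # about 4.1 and 47.7


def predict(x):
    """Use the learned line to predict the output for a new input."""
    return slope * x + intercept


print(predict(6))  # about 72.3
```

"Training" here is just computing two numbers from the labelled pairs; prediction then applies them to an input the model has never seen.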

Each ML algorithm has its own strengths and weaknesses, and the choice of algorithm depends on the specific problem to be solved and the nature of the data. By selecting and applying the appropriate ML algorithm, AI systems can learn to make accurate predictions, identify patterns and trends, and automate decision-making processes.
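The unsupervised case from the list above can be illustrated with K-Means Clustering. The sketch below is a plain-Python version of the standard k-means procedure (Lloyd's algorithm): it alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. The 2-D points are made up, and the algorithm is never told which group any point belongs to; it finds the two groups on its own.

```python
import math
import random


def k_means(points, k, iterations=100, seed=0):
    """Plain k-means on 2-D points. Returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return centroids, labels


# Hypothetical unlabelled data with two visually obvious groups.
points = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
centroids, labels = k_means(points, k=2)
# The first three points share one label and the last three share the other;
# the centroids end up near (1.5, 1.5) and (8.5, 8.5).
```

Which cluster gets label 0 and which gets label 1 depends on the random initialisation, so only the grouping itself is meaningful.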

Deep Learning Techniques

Deep Learning is a subset of Machine Learning that involves training deep neural networks with multiple layers to learn complex patterns and relationships in data. Here are some of the major Deep Learning techniques used in Artificial Intelligence (AI) systems:

- Convolutional Neural Networks (CNNs): CNNs are used for image and video recognition tasks. Their convolutional layers slide small learned filters over the input image to extract features such as edges, textures, and shapes. CNNs are widely used in applications such as object detection, facial recognition, and self-driving cars.

- Recurrent Neural Networks (RNNs): RNNs are used for natural language processing and sequential data analysis tasks. They learn to process sequences of data by maintaining a memory of past inputs and predicting future outputs. RNNs are widely used in applications such as speech recognition, machine translation, and text generation.

- Generative Adversarial Networks (GANs): GANs are used for generating new content such as images, videos, and audio. They learn to generate new data by pitting two neural networks against each other, one that generates fake data and the other that tries to detect it. GANs are widely used in applications such as image and video synthesis, data augmentation, and data privacy.

- Autoencoders: Autoencoders are used for data compression and dimensionality reduction tasks. They learn to compress the input into a smaller internal representation and then reconstruct the original input from it, so the compressed form has to preserve the data's essential features. Autoencoders are widely used in applications such as image and video compression, anomaly detection, and feature extraction.

- Deep Reinforcement Learning: Deep Reinforcement Learning combines deep neural networks with reinforcement learning to enable AI systems to learn to take actions in an environment to maximize a reward signal. This technique is widely used in applications such as game playing, robotics, and recommendation systems.
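The core operation inside a CNN's convolutional layer can be sketched in plain Python. In a real CNN the filter (kernel) values are learned during training; here a hand-picked vertical-edge kernel and a tiny synthetic image are assumptions used purely to show how sliding a filter over an image produces a feature map that responds where brightness changes. Like most deep learning libraries, the sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """'Valid' 2-D convolution as used in CNN layers: no padding, stride 1,
    kernel not flipped (cross-correlation, the usual deep learning convention)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and sum.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Synthetic 4x6 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked vertical-edge detector (a CNN would learn values like these).
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge_kernel)
# [[0.0, 3.0, 3.0, 0.0],
#  [0.0, 3.0, 3.0, 0.0]]  -> strong response only where the window straddles the edge
```

A convolutional layer applies many such filters in parallel, and stacking layers lets later filters combine simple features like edges into more complex ones.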

Each Deep Learning technique has its own strengths and weaknesses, and the choice of technique depends on the specific problem to be solved and the nature of the data. By applying appropriate Deep Learning techniques, AI systems can learn to perform complex tasks such as recognizing faces, understanding natural language, and generating new content.
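Deep reinforcement learning builds on classic algorithms such as Q-Learning, replacing a lookup table of action values with a neural network. A minimal tabular Q-learning sketch in Python shows the underlying update rule, Q(s, a) ← Q(s, a) + α · (r + γ · max Q(s′, a′) − Q(s, a)). The environment (a five-cell corridor with a reward at the right end) and the hyperparameter values are assumptions chosen for illustration.

```python
import random

# Hypothetical environment: a corridor of cells 0..4. The agent starts in
# cell 0 and receives a reward of 1 only when it reaches cell 4.
N_STATES = 5
ACTIONS = (-1, +1)                      # move left, move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # learning rate, discount, exploration rate


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, 0.0, False


def train(episodes=200, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random sometimes, otherwise pick the
            # best known action (ties broken at random).
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Value of the next state; the terminal state adds nothing further.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update toward reward + discounted best future value.
            q[(state, action)] += ALPHA * (reward + GAMMA * future - q[(state, action)])
            state = next_state
    return q


q_table = train()
# Greedy policy for every non-terminal cell: +1 means "move right".
policy = {s: max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)}
```

After training, the agent has learned through trial and error (the reward feedback described earlier) to move right in every cell. Deep reinforcement learning keeps the same update idea but uses a neural network to estimate Q values when there are too many states to list in a table.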

Natural Language Processing

Natural Language Processing (NLP) is a branch of Artificial Intelligence (AI) that deals with the interaction between computers and human languages. NLP techniques are used to enable computers to understand, interpret, and generate human language. Here are some of the major NLP techniques used in AI systems:

- Text Classification: Text classification is the process of assigning predefined categories or labels to a given text document. It is widely used in applications such as spam filtering, sentiment analysis, and topic classification.

- Named Entity Recognition (NER): NER is the process of identifying and extracting named entities such as persons, organizations, and locations from a given text document. It is widely used in applications such as information extraction, event detection, and recommendation systems.

- Sentiment Analysis: Sentiment analysis is the process of determining the emotional tone or sentiment of a given text document. It is widely used in applications such as social media monitoring, customer feedback analysis, and brand reputation management.

- Machine Translation: Machine translation is the process of automatically translating text from one language to another. It is widely used in applications such as language learning, international communication, and document translation.

- Speech Recognition: Speech recognition is the process of converting spoken words into text. It is widely used in applications such as voice assistants, speech-to-text transcription, and hands-free control of devices.

- Text Summarization: Text summarization is the process of generating a shorter version of a given text document while retaining its most important information. It is widely used in applications such as news aggregation, document summarization, and search engine result snippets.
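Text classification, such as the spam filtering mentioned above, is often introduced with a Naive Bayes classifier. The sketch below is a small multinomial Naive Bayes with add-one (Laplace) smoothing, written in plain Python. The four training sentences and the "spam"/"ham" labels are invented for illustration, and simple whitespace splitting stands in for a real tokenizer.

```python
import math
from collections import Counter


def tokenize(text):
    # Whitespace tokenizer, lower-cased; a stand-in for a real tokenizer.
    return text.lower().split()


def train_naive_bayes(docs):
    """docs is a list of (text, label) pairs. Returns the counts the model needs."""
    label_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in label_counts}
    for text, label in docs:
        word_counts[label].update(tokenize(text))
    vocab = {word for counts in word_counts.values() for word in counts}
    return label_counts, word_counts, vocab


def classify(model, text):
    """Return the label with the highest log-probability for the text."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in label_counts.items():
        score = math.log(n_docs / total_docs)  # log prior P(label)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # log P(word | label) with add-one smoothing, so an unseen word
            # does not drive the whole probability to zero.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training_docs = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team on monday", "ham"),
]
model = train_naive_bayes(training_docs)

print(classify(model, "free prize"))              # spam
print(classify(model, "team meeting on monday"))  # ham
```

Working in log-probabilities avoids numerical underflow when many small word probabilities are multiplied together.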

Each NLP technique has its own strengths and weaknesses, and the choice of technique depends on the specific problem to be solved and the nature of the text data. By applying appropriate NLP techniques, AI systems can learn to analyze and understand human language and perform tasks such as sentiment analysis, document translation, and speech recognition.
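Text summarization methods are usually divided into extractive ones, which select existing sentences, and abstractive ones, which write new text. A very simple extractive approach scores each sentence by how often its words appear in the whole document and keeps the top-scoring sentences. The Python sketch below illustrates that idea; the short stop-word list and the sample text are assumptions for illustration, not a production method.

```python
import re
from collections import Counter

# A tiny illustrative stop-word list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "is", "of", "and", "to", "in", "it", "for", "on"}


def content_words(text):
    """Lower-cased words with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text, num_sentences=1):
    """Frequency-based extractive summary: keep the highest-scoring sentences."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        # Average document frequency of the sentence's words, so long
        # sentences are not favoured just for being long.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in keep)


article = ("Solar power is growing fast. Solar panels are cheaper than ever, "
           "and solar power now competes with coal. My cat likes naps.")

print(summarize(article))  # Solar power is growing fast.
```

The off-topic last sentence scores lowest because its words appear nowhere else in the text.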

Computer Vision

Computer vision is a branch of Artificial Intelligence (AI) that deals with enabling machines to interpret and understand images and videos as humans do. It involves the use of algorithms and mathematical models to analyze and interpret digital images and videos. Here are some of the major applications of computer vision in AI:

- Object Detection: Object detection is the process of identifying and localizing objects in an image or video. It is widely used in applications such as autonomous driving, surveillance, and robotics.

- Image Classification: Image classification is the process of categorizing an image into a predefined class or category. It is widely used in applications such as medical image analysis, quality control in manufacturing, and facial recognition.

- Image Segmentation: Image segmentation is the process of dividing an image into multiple regions or segments based on their visual properties. It is widely used in applications such as image editing, medical imaging, and satellite imagery analysis.

- Scene Reconstruction: Scene reconstruction is the process of creating a 3D model of a scene from a series of 2D images. It is widely used in applications such as augmented reality, virtual reality, and 3D printing.

- Object Tracking: Object tracking is the process of following a moving object over time in a video. It is widely used in applications such as traffic monitoring, sports analysis, and security surveillance.

- Gesture Recognition: Gesture recognition is the process of identifying and interpreting human gestures, such as hand movements and facial expressions. It is widely used in applications such as human-computer interaction, gaming, and sign language translation.
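Object detection involves localizing objects with bounding boxes, and a standard way to check how well a predicted box matches the true one is Intersection over Union (IoU): the area where the two boxes overlap divided by the area they cover together. A detection is often counted as correct when IoU is at least 0.5, the threshold used in the PASCAL VOC benchmark. The sketch below assumes boxes given as `(x_min, y_min, x_max, y_max)`; the example coordinates are made up.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes given as
    (x_min, y_min, x_max, y_max). Returns a value between 0 and 1."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)
ground_truth = (5, 5, 15, 15)
score = iou(predicted, ground_truth)  # 25 / 175, roughly 0.143
is_correct = score >= 0.5             # False: the boxes overlap too little
```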

Each computer vision technique has its own strengths and weaknesses, and the choice of technique depends on the specific problem to be solved and the nature of the images or videos. By applying appropriate computer vision techniques, AI systems can learn to analyze and interpret digital images and videos and perform tasks such as object detection, image segmentation, and scene reconstruction.

Robotics and Automation

Automation and Robotics are two important fields in the realm of Artificial Intelligence (AI). Robotics deals with the design, construction, and operation of robots, while automation involves the use of machines to perform tasks without human intervention. Here are some of the major applications of robotics and automation in AI:

- Manufacturing: Robotics and automation are widely used in manufacturing to automate repetitive and dangerous tasks such as welding, painting, and assembly. By using robots and automation, manufacturers can improve productivity, reduce costs, and enhance quality.

- Healthcare: Robotics and automation are increasingly being used in healthcare to perform tasks such as surgery, diagnosis, and rehabilitation. By using robots and automation, healthcare providers can improve patient outcomes, reduce costs, and enhance safety.

- Agriculture: Robotics and automation are being used in agriculture to automate tasks such as planting, harvesting, and crop monitoring. By using robots and automation, farmers can improve yields, reduce labor costs, and optimize resource utilization.

- Logistics: Robotics and automation are widely used in logistics to automate tasks such as sorting, packing, and shipping. By using robots and automation, logistics companies can improve efficiency, reduce errors, and enhance customer satisfaction.

- Education: Robotics and automation are being used in education to teach students about robotics, engineering, and programming. By using robots and automation, educators can enhance student engagement, promote creativity, and develop critical thinking skills.

- Service Industry: Robotics and automation are increasingly being used in service industries such as hospitality and retail to automate tasks such as cleaning, inventory management, and customer service. By using robots and automation, service providers can improve efficiency, reduce costs, and enhance the customer experience.

Robotics and automation are transforming the way we live and work, and are enabling us to automate tasks that were once thought to be impossible. By combining robotics and automation with AI, we can create intelligent systems that can learn and adapt to changing environments, and perform tasks with greater efficiency and accuracy.

Ethics and Concerns in Artificial Intelligence

Artificial Intelligence (AI) is a rapidly growing field that has the potential to transform our society in many positive ways. However, as with any emerging technology, there are also ethical and societal concerns that must be addressed. Here are some of the major ethical concerns in AI:

- Bias: AI algorithms can be biased if they are trained on biased data or if the algorithm itself is designed with biased assumptions. This can lead to discrimination against certain groups of people, such as minorities or women.

- Privacy: AI systems can collect and process large amounts of personal data, which raises concerns about privacy and data protection. There is a risk that this data could be misused or fall into the wrong hands.

- Autonomy: Autonomous AI systems, such as self-driving cars, raise ethical concerns about responsibility and accountability. If an autonomous system causes harm or makes a mistake, who is responsible?

- Transparency: AI systems can be complex and difficult to understand, which raises concerns about transparency and accountability. It is important that AI systems are designed to be transparent and explainable so that their decisions can be understood and challenged.

- Employment: AI has the potential to automate many jobs, which raises concerns about unemployment and inequality. It is important that society prepares for the impact of AI on the job market and takes steps to ensure that everyone benefits from the advances in technology.

- Safety: Autonomous AI systems, such as drones or robots, raise concerns about safety and security. It is important that these systems are designed to be safe and secure, and that they are tested thoroughly before being deployed.

It is important that we address these ethical concerns in AI and develop guidelines and regulations to ensure that AI is developed and used in a responsible and ethical way. This will require collaboration between governments, industry, academia, and civil society to ensure that AI is used to benefit everyone and that the risks and challenges are addressed in a responsible way.

Future of Artificial Intelligence

The future of Artificial Intelligence (AI) is exciting and full of possibilities. Here are some of the key areas where AI is expected to have a significant impact in the years to come:

- Healthcare: AI is expected to revolutionize healthcare by enabling personalized treatment, predicting diseases, and improving the accuracy of diagnoses. AI-powered medical devices and health monitoring systems could help improve patient outcomes and reduce costs.

- Transportation: AI is expected to play a major role in the development of autonomous vehicles, which will transform the way we travel and reduce accidents caused by human error. AI-powered traffic management systems will help to improve traffic flow and reduce congestion.

- Finance: AI is already being used in finance to detect fraud, predict market trends, and automate customer service. In the future, AI will continue to play a major role in finance, enabling more efficient and accurate financial services.

- Education: AI is expected to transform education by enabling personalized learning and providing new ways to teach and learn. AI-powered tutoring systems and educational robots will help to improve student outcomes and reduce costs.

- Energy: AI is expected to play a major role in the development of renewable energy sources, enabling more efficient and cost-effective energy production and distribution.

- Agriculture: AI is already being used in agriculture to improve crop yields and optimize resource utilization. In the future, AI will continue to play a major role in agriculture, helping to feed a growing global population while minimizing the environmental impact of agriculture.

Overall, the future of AI is bright and full of opportunities. However, it is important that we address the ethical and societal concerns associated with AI, and ensure that AI is developed and used in a responsible and ethical way. By doing so, we can harness the full potential of AI to improve our lives and build a better future for all.

Impact of Artificial Intelligence on Society

Artificial Intelligence (AI) has the potential to transform society in many positive ways, but it also raises concerns about its impact on people and society as a whole. Here are some of the key areas where AI is expected to have an impact on society:

- Employment: AI has the potential to automate many jobs, which raises concerns about unemployment and inequality. While AI is expected to create new jobs, there is a risk that the benefits of AI will not be evenly distributed.

- Education: AI is expected to transform education by enabling personalized learning and providing new ways to teach and learn. However, there is a risk that AI could widen the gap between those who have access to high-quality education and those who do not.

- Healthcare: AI is expected to revolutionize healthcare by enabling personalized treatment, predicting diseases, and improving the accuracy of diagnoses. However, there is a risk that AI could widen the gap between those who have access to high-quality healthcare and those who do not.

- Privacy: AI systems can collect and process large amounts of personal data, which raises concerns about privacy and data protection. There is a risk that this data could be misused or fall into the wrong hands.

- Bias and discrimination: AI algorithms can be biased if they are trained on biased data or if the algorithm itself is designed with biased assumptions. This can lead to discrimination against certain groups of people, such as minorities or women.

- Autonomy: Autonomous AI systems, such as self-driving cars, raise ethical concerns about responsibility and accountability. If an autonomous system causes harm or makes a mistake, who is responsible?

It is important that we address these concerns and ensure that AI is developed and used in a responsible and ethical way. This will require collaboration between governments, industry, academia, and civil society to ensure that AI is used to benefit everyone and that the risks and challenges are addressed in a responsible way. By doing so, we can harness the full potential of AI to improve our lives and build a better future for all.

Advantages and Disadvantages of Artificial Intelligence

Like any powerful technology, Artificial Intelligence (AI) comes with both benefits and drawbacks. Here are some of the key advantages and disadvantages of AI:

Advantages:

- Efficiency: AI can process large amounts of data quickly and accurately, enabling businesses and organizations to make better decisions and optimize their operations.

- Accuracy: AI can perform tasks with a high degree of accuracy, reducing the risk of human error.

- Personalization: AI can be used to personalize products and services, improving customer experience and satisfaction.

- Cost Savings: AI can automate repetitive and labor-intensive tasks, reducing costs and freeing up human resources for other tasks.

- Innovation: AI can be used to develop new products and services that would not be possible with human labor alone.

Disadvantages:

- Job displacement: AI has the potential to automate many jobs, which raises concerns about unemployment and inequality.

- Bias and discrimination: AI algorithms can be biased if they are trained on biased data or if the algorithm itself is designed with biased assumptions. This can lead to discrimination against certain groups of people, such as minorities or women.

- Lack of creativity: AI is good at performing repetitive tasks but lacks the creativity and problem-solving abilities of humans.

- Dependence: As we become more dependent on AI, there is a risk that we will lose our ability to perform tasks without the assistance of machines.

- Privacy and security: AI systems can collect and process large amounts of personal data, raising concerns about privacy and data protection. There is a risk that this data could be misused or fall into the wrong hands.

Overall, it is important to weigh these advantages against the disadvantages and ensure that AI is developed and used in a responsible and ethical way. By doing so, we can harness the full potential of AI to improve our lives and build a better future for all.

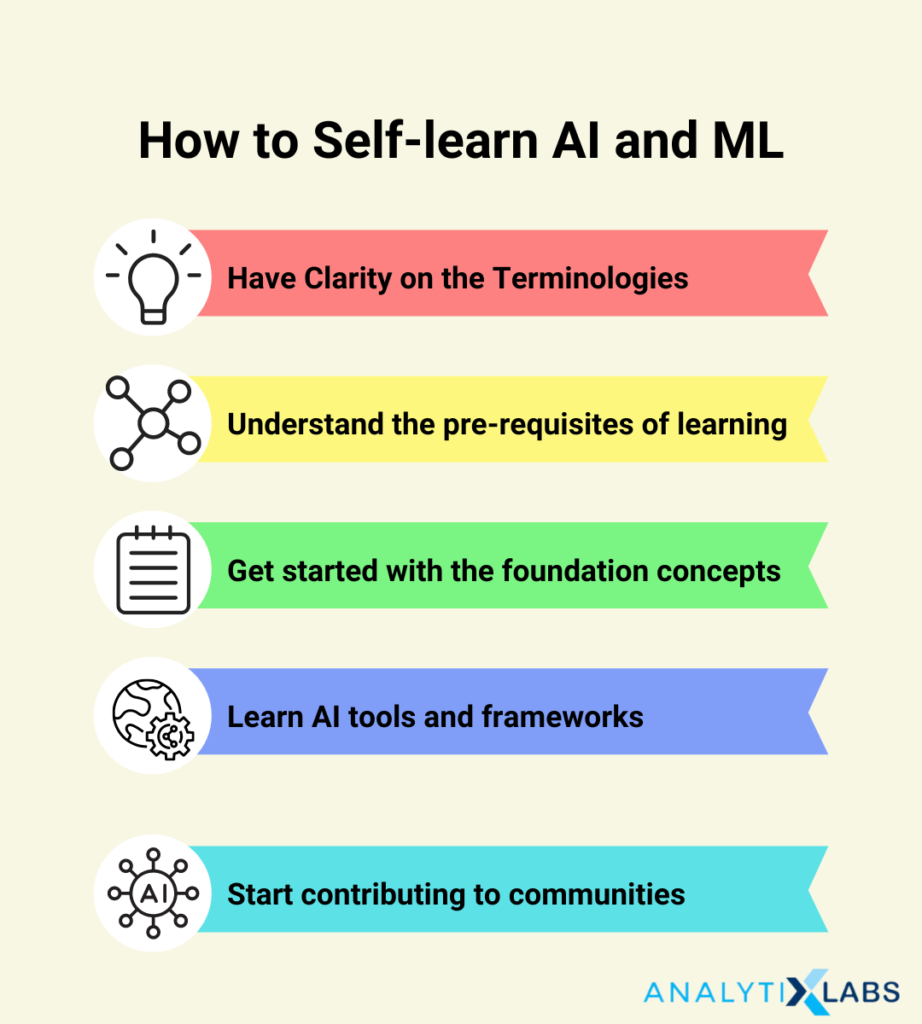

How to Learn Artificial Intelligence

Learning Artificial Intelligence (AI) requires a solid foundation in mathematics, computer science, and programming. Here are some steps to help you get started in learning AI:

- Learn the basics of programming: To get started with AI, you need to learn a programming language such as Python, Java, or C++; Python is the most common choice for AI work. There are many online resources available to learn programming, such as Codecademy, Udacity, and Coursera.

- Learn mathematics and statistics: AI is heavily based on mathematics and statistics. To understand AI algorithms, you need to be familiar with concepts such as linear algebra, calculus, probability, and statistics. You can learn these concepts through online courses or textbooks.

- Choose an area of focus: AI is a broad field, so it is important to choose an area of focus that interests you, such as machine learning, natural language processing, computer vision, or robotics.

- Take online courses and tutorials: There are many free and paid online courses and tutorials available to learn AI. Popular options include Andrew Ng’s machine learning courses on Coursera and the official tutorials on the TensorFlow and PyTorch websites.

- Participate in projects and competitions: To gain practical experience in AI, work on projects and enter competitions, such as those hosted on Kaggle, where you can tackle real-world problems and compete with other AI enthusiasts.

- Join AI communities: Joining AI communities such as forums, online groups, and meetups can help you learn from experts, network with other AI enthusiasts, and stay up-to-date with the latest developments in the field.

- Practice and experiment: Finally, to become proficient in AI, you need to practice and experiment with different algorithms and techniques. Create your own projects and try to apply AI to real-world problems.

In summary, learning AI requires a solid foundation in mathematics, computer science, and programming, as well as choosing an area of focus, taking online courses and tutorials, participating in projects and competitions, joining AI communities, and practicing and experimenting with different algorithms and techniques. With dedication and hard work, anyone can learn AI and become proficient in this exciting field.

Famous Examples of Artificial Intelligence in Action

Artificial Intelligence (AI) is being used in various fields and has made significant progress in recent years. Here are some famous examples of AI in action:

- Siri and Alexa: These virtual assistants use natural language processing and machine learning algorithms to understand voice commands and perform various tasks, such as making phone calls, setting reminders, and providing information.

- Self-driving cars: Companies such as Tesla and Waymo (which began as Google’s self-driving car project) are using AI to develop self-driving cars. These cars use computer vision and machine learning algorithms to analyze their surroundings and make decisions on steering, braking, and acceleration.

- Healthcare: AI is being used in healthcare to analyze medical images, diagnose diseases, and develop personalized treatments. For example, IBM’s Watson has been used to help doctors choose cancer treatments based on individual patients’ records and genetic profiles.

- Fraud detection: Banks and financial institutions use AI algorithms to detect fraudulent transactions by analyzing patterns and anomalies in transaction data.

- Recommendation systems: Websites such as Netflix and Amazon use AI to recommend products and services to customers based on their past purchases and browsing behavior.

- Social media: Social media platforms such as Facebook and Twitter use AI to analyze user behavior and preferences, personalize content, and detect and remove inappropriate content.

- Gaming: AI is used in gaming to create intelligent and adaptive opponents, as well as to create more immersive virtual worlds.
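Recommendation systems like the ones mentioned above are often built on collaborative filtering: users who rated items similarly in the past are assumed to have similar tastes. The sketch below is a minimal user-based version in plain Python, using cosine similarity over the items two users have both rated. The ratings are invented, and the sketch is not meant to represent the far more elaborate systems that Netflix or Amazon actually run.

```python
import math

# Hypothetical 1-5 star ratings; a missing title means "not rated yet".
ratings = {
    "alice": {"Inception": 5, "Interstellar": 5, "The Notebook": 1},
    "bob":   {"Inception": 4, "Interstellar": 5, "Tenet": 5},
    "carol": {"The Notebook": 5, "Titanic": 5, "Inception": 1},
}


def cosine_similarity(a, b):
    """Cosine similarity between two users, computed over items both rated."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[i] * b[i] for i in common)
    norm_a = math.sqrt(sum(a[i] ** 2 for i in common))
    norm_b = math.sqrt(sum(b[i] ** 2 for i in common))
    return dot / (norm_a * norm_b)


def recommend(user, ratings):
    """Rank items the user has not rated by other users' ratings,
    weighted by how similar each other user is to this one."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        for item, rating in their_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)


print(recommend("alice", ratings))  # ['Tenet', 'Titanic']
```

Alice's tastes match Bob's far more closely than Carol's, so Bob's favourite unseen film ranks first.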

These are just a few examples of how AI is being used in various fields. As AI technology continues to advance, we can expect to see more innovative and exciting applications of AI in the future.
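The fraud-detection example above relies on spotting transactions that do not fit a customer's usual pattern. Real banking systems combine many signals and learned models, but the basic idea of anomaly detection can be sketched with a simple z-score test using Python's standard `statistics` module: flag a new amount if it lies more than three standard deviations from the mean of past amounts. The transaction history and the threshold of 3 are assumptions for illustration.

```python
import statistics


def is_suspicious(history, amount, threshold=3.0):
    """Flag `amount` if it lies more than `threshold` sample standard
    deviations from the mean of the customer's past transaction amounts."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # needs at least two past transactions
    return abs(amount - mean) > threshold * stdev


# Hypothetical past card transactions for one customer (in dollars).
past_amounts = [42.0, 38.5, 55.0, 47.25, 40.0, 51.0, 44.75]

for amount in (45.0, 900.0):
    print(amount, is_suspicious(past_amounts, amount))
# 45.0 False
# 900.0 True
```

A single fixed rule like this is easy to fool, which is why production systems learn patterns from many features (merchant, location, time of day) rather than the amount alone.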

Conclusion

In conclusion, Artificial Intelligence (AI) has revolutionized the way we interact with technology and is rapidly transforming various industries, including healthcare, finance, transportation, and entertainment. AI is based on complex algorithms, including machine learning and deep learning, and is powered by vast amounts of data.

There are different types of AI, such as narrow or weak AI and general or strong AI. AI has many applications, such as virtual assistants, self-driving cars, healthcare, fraud detection, recommendation systems, and gaming. However, there are also concerns about the ethics and potential misuse of AI, such as job displacement, biased algorithms, and security risks.

It is essential to use AI responsibly and ethically to ensure that its benefits are maximized and its risks are minimized. To learn AI, one needs to have a solid foundation in mathematics, computer science, and programming and choose an area of focus. Finally, AI is still a developing field, and we can expect to see more exciting and innovative applications of AI in the future.

Thanks for reading this post (The Power of Artificial Intelligence: A Comprehensive Guide to AI’s Features and Capabilities). If any information in this post is wrong or missing, please let us know in the comment box.

If you have any doubts or questions about “The Power of Artificial Intelligence: A Comprehensive Guide to AI’s Features and Capabilities”, please comment in the comment box.